
The best local model

Z.ai releases the best open source model you can run at home, Devin shows us the future of code review, and Suno at home

tl;dr

- Z.ai releases the best model for running at home

- Devin shows us the future of code review

- Do we finally have Suno at home?

Releases

GLM 4.7 Flash

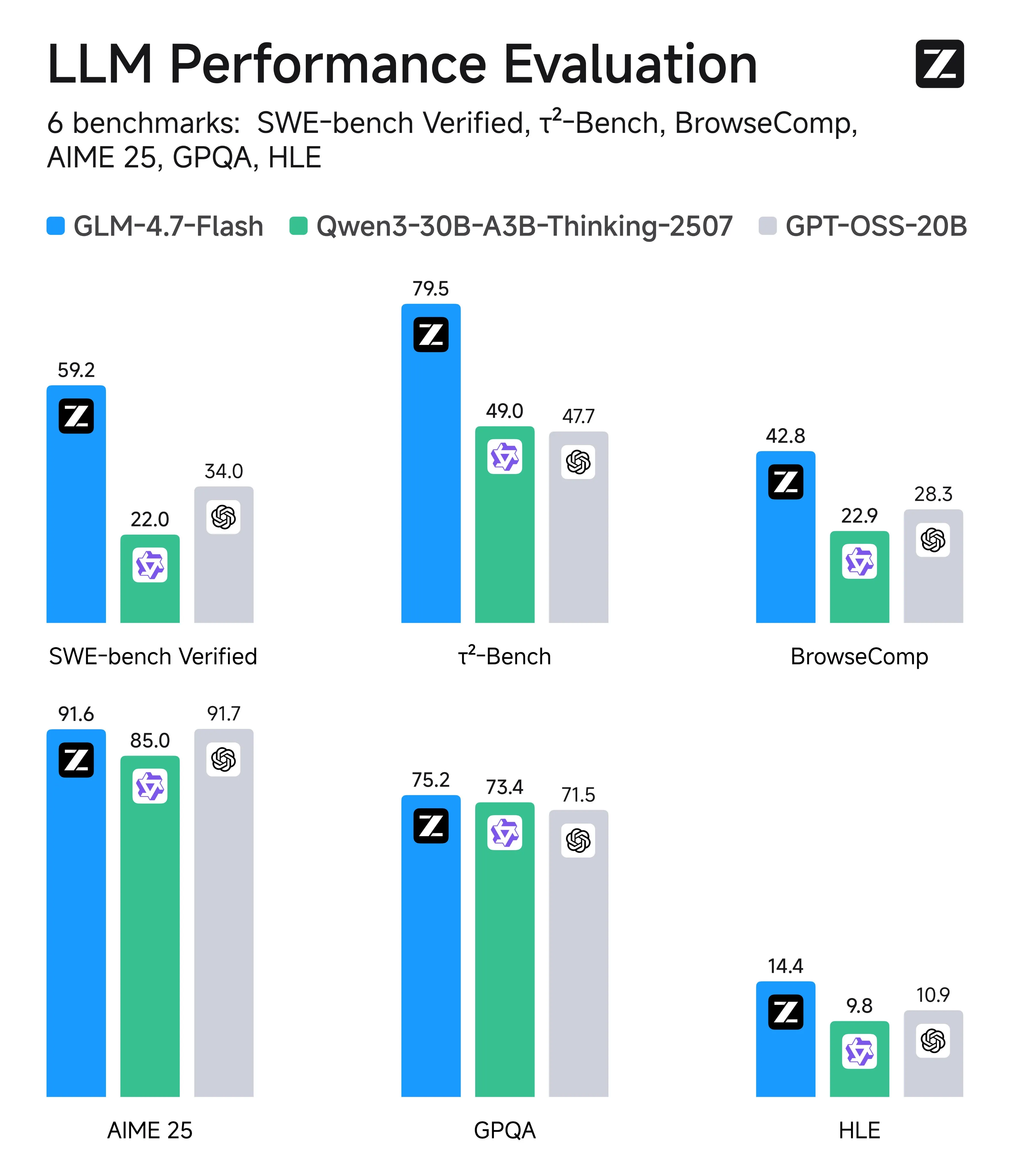

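Z.ai has released GLM 4.7 Flash, a smaller version of their very strong GLM 4.7 model. It is a 30-billion-parameter mixture-of-experts (MoE) model. Because only a subset of its experts is active for each token, it generates much faster than a dense model of the same size, which makes it ideal for running locally (if you have more than 24GB of combined system RAM and GPU memory).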
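Why the memory requirement is still sizeable: a mixture-of-experts model only activates some experts per token, but all of its weights have to be resident in memory. The back-of-the-envelope sketch below estimates weight storage as parameters × bits per weight ÷ 8. It is only a rough estimate: it ignores the KV cache, activations, and runtime overhead, and the 4.5 bits-per-weight figure is an assumed stand-in for a typical 4-bit quantization, not the size of any specific GLM 4.7 Flash file.

```python
# Rough weight-memory estimate: parameters * bits per weight / 8 bytes.
# Ignores KV cache, activations, and runtime overhead, so real usage is higher.

def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


PARAMS = 30e9  # ~30 billion total parameters; every expert must be in memory

# Assumed bit widths for illustration; actual quantized files vary.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("~4-bit", 4.5)]:
    print(f"{label:>7}: {weight_memory_gb(PARAMS, bits):5.1f} GB")
```

At 16 bits the weights alone come to about 60 GB, while a roughly 4-bit quantization brings them to about 17 GB. That is why a quantized copy plus some room for context fits comfortably in 24GB or more of combined memory.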

Its performance is frankly ridiculous for its size. Its SWE-bench Verified score is higher than that of Sonnet 3.6 (the upgraded Claude 3.5 Sonnet from October 2024), the model that started the vibe coding revolution. In other words, in about a year we have gone from a frontier model to something of comparable coding ability that many people can run on their own hardware.

It doesn’t just perform well on benchmarks, either. I have started using the model locally, and it is the first local model I’ve tried that seems to intuitively understand when to use tools for real-world tasks. Most open source models I run have to be begged to call a tool, and when they do, they tend to call it only once, even if the call fails or doesn’t return the right information.

GLM 4.7 Flash doesn’t have this issue, which makes it extremely usable for day-to-day agent tasks. As I build out the tools and harness around it, I can see it replacing all of my non-coding AI usage.
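The retry behavior described above lives partly in the model and partly in the harness around it. Below is a minimal sketch of such a harness loop, assuming a model that returns either a tool call or a final answer. Tool errors are fed back into the history instead of ending the run, so a capable model gets the chance to try again. `scripted_model`, `lookup`, and `FACTS` are hypothetical stand-ins for illustration, not part of any real library or of GLM 4.7 Flash itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ToolCall:
    name: str
    args: dict


@dataclass
class ModelTurn:
    # Either the model wants to call a tool, or it has a final answer.
    tool_call: Optional[ToolCall] = None
    answer: Optional[str] = None


def run_agent(model: Callable[[List[dict]], ModelTurn],
              tools: Dict[str, Callable[..., object]],
              user_msg: str,
              max_steps: int = 5) -> Optional[str]:
    """Loop until the model answers or the step budget runs out."""
    history: List[dict] = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        turn = model(history)
        if turn.answer is not None:
            return turn.answer
        call = turn.tool_call
        if call is None:
            return None  # model produced neither a tool call nor an answer
        try:
            result = tools[call.name](**call.args)
        except Exception as exc:  # report failures back instead of crashing
            result = f"error: {exc!r}"
        history.append({"role": "tool", "name": call.name, "content": str(result)})
    return None  # gave up after max_steps tool calls


# Hypothetical stand-ins for a real model and tool.
FACTS = {"glm-4.7-flash": "30B MoE"}


def lookup(key: str) -> str:
    return FACTS[key]  # raises KeyError for unknown keys


def scripted_model(history: List[dict]) -> ModelTurn:
    tool_msgs = [m for m in history if m["role"] == "tool"]
    if not tool_msgs:
        return ModelTurn(tool_call=ToolCall("lookup", {"key": "glm"}))  # bad first guess
    if tool_msgs[-1]["content"].startswith("error"):
        return ModelTurn(tool_call=ToolCall("lookup", {"key": "glm-4.7-flash"}))  # retry
    return ModelTurn(answer=f"Found: {tool_msgs[-1]['content']}")


print(run_agent(scripted_model, {"lookup": lookup}, "What is GLM 4.7 Flash?"))
```

The scripted model's first lookup fails, it sees the error in its history, and it retries with a better key. This is the behavior a weaker model skips when it stops after a single call.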

If you are interested in running or using local models at all, GLM 4.7 Flash should be at the top of your list to try. To run it, I would look at llama.cpp, as it is fast and runs on Windows, macOS, and Linux, on CPUs as well as NVIDIA, AMD, and Apple GPUs.
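If you go the llama.cpp route, its `llama-server` program exposes an OpenAI-compatible `/v1/chat/completions` endpoint, on port 8080 by default. The sketch below builds a chat request with one tool attached, using only the Python standard library. It assumes a local server is already running with a GLM 4.7 Flash GGUF loaded. Depending on your llama.cpp version, tool calling may also require starting the server with `--jinja`. The `get_weather` tool is hypothetical.

```python
import json
import urllib.request

# Assumption: llama-server is running locally on its default port.
SERVER_URL = "http://127.0.0.1:8080/v1/chat/completions"

# Hypothetical tool definition in the OpenAI function-calling schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(messages, tools=None) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    payload = {"messages": messages}
    if tools:
        payload["tools"] = tools
    return urllib.request.Request(
        SERVER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> dict:
    """Send the request and parse the JSON reply (requires a running server)."""
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)
```

With the server running, `send(build_request([{"role": "user", "content": "Weather in Paris?"}], tools=[WEATHER_TOOL]))` returns an OpenAI-style response. If the model decides to use the tool, `["choices"][0]["message"]["tool_calls"]` holds the call for your harness to execute.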

Devin Code Review

Code review on GitHub feels antiquated in the age of AI. As models write more of the code, reviewing it has become the main time sink for humans. The folks at Cognition, the company behind Devin, agree, and have built their own AI-powered PR review platform, called Devin Review, which they are offering for free right now.

Instead of listing files and changes alphabetically or by line number, Devin Review groups them by logical feature, so you can quickly look through a change and see all the related code side by side. It also summarizes and explains what the code is doing. In the same view, it can flag things that may be missing and report any bugs it finds.
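Cognition hasn’t said how Devin Review decides which changes belong together, and it most likely relies on a model rather than a fixed rule. As a toy illustration of the general idea only, here is a hypothetical heuristic. It treats two diff hunks as related if they touch the same identifier and merges related hunks into groups with union-find. The hunk labels and symbol sets are made-up inputs.

```python
from collections import defaultdict
from typing import Dict, List, Set


def group_hunks(hunks: Dict[str, Set[str]]) -> List[List[str]]:
    """Group hunks that (transitively) share at least one identifier."""
    parent = {h: h for h in hunks}

    def find(x: str) -> str:
        # Walk to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_seen: Dict[str, str] = {}  # identifier -> first hunk that touched it
    for hunk, symbols in hunks.items():
        for sym in symbols:
            if sym in first_seen:
                union(first_seen[sym], hunk)
            else:
                first_seen[sym] = hunk

    groups = defaultdict(list)
    for hunk in hunks:
        groups[find(hunk)].append(hunk)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical diff: which identifiers each hunk touches.
HUNKS = {
    "api/routes.py:12": {"create_invoice", "InvoiceSchema"},
    "models/invoice.py:40": {"InvoiceSchema"},
    "services/billing.py:88": {"create_invoice", "charge_card"},
    "README.md:3": {"install"},
    "docs/setup.md:10": {"install"},
    "utils/log.py:5": {"log_level"},
}

for group in group_hunks(HUNKS):
    print(group)
```

Here the route, model, and billing hunks form one "invoice" feature because they share identifiers, and the two docs hunks form another, regardless of where the files sit alphabetically.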

It also has other nice-to-haves, like properly showing when code is moved. On GitHub, a move normally shows up as a deleted chunk plus an identical new chunk somewhere else, often in another file, with no indication that the two are the same code or linked in any way. Devin Review shows you the relationship between them, which makes refactors very easy to follow.
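One simple way a review tool could detect moved code is to fingerprint each deleted block and each added block, then match blocks with identical fingerprints. The sketch below does this with whitespace-normalized SHA-256 hashes. It is an assumed technique for illustration, not Devin Review’s actual implementation. Stripping indentation catches re-indented moves, but it can also produce false positives in indentation-sensitive languages like Python.

```python
import hashlib
from typing import Dict, List, Tuple


def block_key(lines: List[str]) -> str:
    """Fingerprint a block, ignoring indentation and blank lines."""
    normalized = "\n".join(line.strip() for line in lines if line.strip())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_moves(deleted: Dict[str, List[str]],
               added: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return (from_location, to_location) pairs for deleted blocks that reappear."""
    added_by_key: Dict[str, List[str]] = {}
    for loc, lines in added.items():
        added_by_key.setdefault(block_key(lines), []).append(loc)
    moves = []
    for loc, lines in deleted.items():
        for target in added_by_key.get(block_key(lines), []):
            moves.append((loc, target))
    return moves


# Hypothetical diff: a helper moved into a new module and re-indented.
DELETED = {"utils.py:10-11": ["def slugify(s):", "    return s.lower().replace(' ', '-')"]}
ADDED = {
    "text/slug.py:1-2": ["def slugify(s):", "  return s.lower().replace(' ', '-')"],
    "app.py:3": ["from text.slug import slugify"],
}

print(find_moves(DELETED, ADDED))
```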

There are many tools trying to revolutionize code review in the age of AI, but until now all of them have been tied to GitHub’s interface. Devin Review is the first one I have seen that offers a genuinely more modern approach to code review, and I expect many more companies and products like it to follow.

Quick Hits

Qwen3 TTS

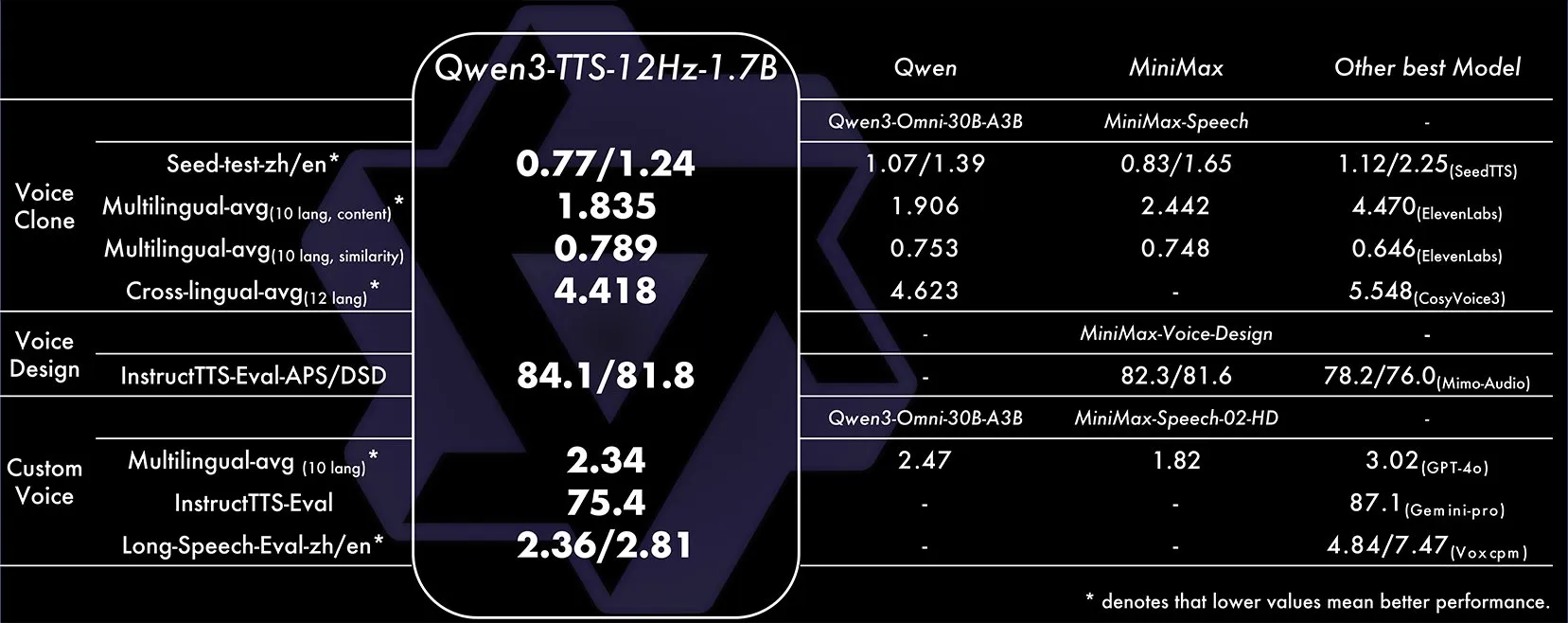

Qwen has had its own text-to-speech model for a while now, but it was closed source, and you had to pay to use it through their API. That changed this week, when they open-sourced their Qwen3 TTS model.

In terms of raw voice quality and clarity, it is not at the level of ElevenLabs or Chatterbox TTS. Where it excels is emotional voice control. You can give a text description of the speaker and how they talk, plus a description of how you want the text you pass in to be delivered. This makes it one of the most expressive text-to-speech models out there, and you can now run it yourself at home.

It comes in two sizes, 600 million and 1.7 billion parameters, both of which are feasible to run on edge devices, although not always in real time. You can try it right now on Hugging Face.
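"Real time" for speech synthesis is usually measured as the real-time factor (RTF): the time spent generating audio divided by the duration of the audio produced. An RTF below 1.0 means the model produces speech faster than it plays back, which is what live use needs. The sketch below computes RTF around any synthesis function. The timings in the example are hypothetical, not measured Qwen3 TTS numbers, and the dummy synthesizer in the test stands in for a real model.

```python
import time
from typing import Callable, Sequence


def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = generation time / audio duration; below 1.0 is faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return synthesis_seconds / audio_seconds


def measure_rtf(synthesize: Callable[[str], Sequence[float]],
                text: str, sample_rate: int) -> float:
    """Time a synthesis call and compute RTF from the number of samples returned."""
    start = time.perf_counter()
    samples = synthesize(text)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, len(samples) / sample_rate)


# Hypothetical timings for illustration only:
print(real_time_factor(12.0, 10.0))  # 12 s to make 10 s of audio: slower than real time
print(real_time_factor(4.0, 10.0))   # 4 s to make 10 s of audio: fast enough to stream
```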

Video Generation Skills

Skills are markdown files of instructions that an LLM agent can load to pick up a new capability on demand. Usually, these relate to code or some other text-based task. This week, though, the community released two new skills for making videos with LLMs, built around Remotion and Manim.
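Many skills, including those following Anthropic’s Agent Skills layout, are a `SKILL.md` file that starts with YAML frontmatter containing a `name` and a `description`, followed by markdown instructions. The agent sees only the name and description up front and reads the full body when a task looks relevant. Assuming the skills follow that layout, the sketch below splits such a file into metadata and body. It handles only flat `key: value` lines, not full YAML, and the example skill content is hypothetical.

```python
from typing import Dict, Tuple


def parse_skill(text: str) -> Tuple[Dict[str, str], str]:
    """Split a SKILL.md-style file into (frontmatter fields, markdown body).

    Only flat `key: value` frontmatter lines are supported; files without a
    terminated '---' frontmatter block are returned as body-only.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    meta: Dict[str, str] = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            return meta, "\n".join(lines[i + 1:]).strip()
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            meta[key.strip()] = value.strip()
    return {}, text  # unterminated frontmatter: treat the whole file as body


# Hypothetical skill file for illustration.
EXAMPLE = """---
name: manim-animations
description: Write Manim scenes that animate math concepts.
---
# Manim
Subclass `Scene` and put the animation steps in `construct()`.
"""

meta, body = parse_skill(EXAMPLE)
print(meta["name"], "|", meta["description"])
```

Keeping only the short description in the agent’s context until it is needed is what lets you install several skills, like these two, without crowding out the task itself.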

Remotion is a React-based library for creating videos programmatically, which is useful because LLMs already write React very well.

Manim is a Python library whose output you have probably seen before, as it is what 3Blue1Brown uses to animate his videos.

If you are interested in making videos or animations with LLMs, I would recommend adding these skills, as they make it very easy.

Suno at home?

Suno has been at the top of the AI text-to-song world for a while now, with no real competitors, especially in open source.

That changed this week, when a group of researchers released HeartMuLa (Heart Music Language model), which offers a song creation experience similar to Suno’s at comparable quality.

Before this, none of the open source music generation models could make anything I would consider listening to, but HeartMuLa is different. It can make cohesive, full-length songs from a text description of the song’s characteristics, and it also lets you provide the lyrics and the song’s structure.

The full-size 7 billion parameter model has yet to be released, but they have dropped the 3 billion parameter version, which to me sounds similar to Suno V3. The general consensus seems to be that it is fairly good, although one downside people have pointed out is that it offers a limited range of song styles.

If you are interested in testing it, you can try the demo on Hugging Face, or go to their GitHub if you want to run it yourself.

Finish

I hope you enjoyed the news this week. If you want to get the news every week, be sure to join our mailing list below.

Stay Updated

Subscribe to get the latest AI news in your inbox every week!